
Loading

A guide on how to handle loading various media resources.

Sizing

By default, the browser will use the intrinsic size of the loaded media to set the dimensions of the provider. As media loads over the network, the element will jump from the default size to the intrinsic media size, triggering a layout shift. Layout shifts are a poor user experience for your users and a negative signal for search engines (e.g., Google).

Aspect Ratio

To avoid a layout shift, we recommend setting the aspect ratio like so:

Ideally, the ratio you set should match the ratio of the media content itself (i.e., its intrinsic aspect ratio); otherwise you’ll end up with letterboxing (empty black bars on the left/right or top/bottom of the media).
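As a rough illustration of why the ratio matters, the TypeScript sketch below reduces a media file's pixel dimensions to the simplest W/H ratio and computes how much of a container would be filled by black bars when the container's ratio does not match the media's. The helper names (aspectRatioOf, barSizes) are hypothetical and not part of the player API.

```typescript
// Greatest common divisor, used to reduce a ratio to its simplest form.
function gcd(a: number, b: number): number {
  return b === 0 ? a : gcd(b, a % b);
}

// Reduce pixel dimensions (e.g., 1920x1080) to a "W/H" ratio string (e.g., "16/9").
function aspectRatioOf(width: number, height: number): string {
  const d = gcd(width, height);
  return `${width / d}/${height / d}`;
}

// When the container and media ratios differ, the media is scaled to fit and
// the leftover space becomes black bars. Returns the total bar size in pixels
// along each axis.
function barSizes(
  containerW: number,
  containerH: number,
  mediaW: number,
  mediaH: number,
): { horizontal: number; vertical: number } {
  const scale = Math.min(containerW / mediaW, containerH / mediaH);
  return {
    horizontal: containerW - mediaW * scale, // left + right bars combined
    vertical: containerH - mediaH * scale, // top + bottom bars combined
  };
}

console.log(aspectRatioOf(1920, 1080)); // prints 16/9

// A 4:3 video (1440x1080) in a 16:9 container (1600x900) leaves roughly
// 400px of bars split between the left and right sides.
console.log(barSizes(1600, 900, 1440, 1080));
```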

Specify Dimensions

If you need more precise control, you can specify the width and height of the player using CSS like so:

Load Strategies

A loading strategy specifies when media or the poster image should begin loading. Loading media too early can effectively slow down your entire application, so choose wisely.

The following media loading strategies are available:

- eager: Load media immediately. Use when media needs to be interactive as soon as possible.

- idle: Load media once the page has loaded and the requestIdleCallback has fired. Use when media is lower priority and doesn’t need to be interactive immediately.

- visible: Load media once it has entered the visual viewport. Use when media is below the fold and you prefer delaying loading until it’s required.

- play: Load the provider and media on play. Use when you want to delay loading until interaction.

- custom: Load media when the startLoading()/startLoadingPoster() method is called or the media-start-loading/media-start-loading-poster event is dispatched. Use when you need fine control over when media should begin loading.
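To make the trigger for each strategy explicit, here is a small TypeScript model. The LoadSignals fields and the shouldStartLoading function are hypothetical names for illustration only; the player tracks these conditions internally and does not expose this function.

```typescript
// The strategy names listed above.
type LoadStrategy = "eager" | "idle" | "visible" | "play" | "custom";

// Hypothetical snapshot of the conditions each strategy waits for.
interface LoadSignals {
  idleCallbackFired: boolean; // page loaded and requestIdleCallback has run
  inViewport: boolean; // element has entered the visual viewport
  playRequested: boolean; // user has requested playback
  startLoadingCalled: boolean; // startLoading() called or event dispatched
}

// Each strategy starts loading once its own trigger condition is met.
function shouldStartLoading(strategy: LoadStrategy, s: LoadSignals): boolean {
  switch (strategy) {
    case "eager":
      return true;
    case "idle":
      return s.idleCallbackFired;
    case "visible":
      return s.inViewport;
    case "play":
      return s.playRequested;
    case "custom":
      return s.startLoadingCalled;
  }
}
```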

The poster load strategy specifies when the poster should begin loading. Poster loading is separate from media loading so you can display an image before media is ready for playback. This generally works well in combination with load="play" to create thumbnails.

Custom Strategy

A custom load strategy lets you control when media or the poster image should begin loading:

View Type

The view type suggests what type of media layout will be displayed. It can be either audio or video. This is mostly to inform layouts, whether your own or the defaults, how to appropriately display the controls and general UI. By default, the view type is inferred from the provider and media type. You can specify the desired type like so:

Stream Type

The stream type refers to the mode in which content is delivered through the video player. The player will use the type to determine how to manage state/internals such as duration updates, seeking, and how to appropriately present UI components and layouts. The stream type can be one of the following values:

- on-demand: Video on Demand (VOD) content is pre-recorded and can be accessed and played at any time. VOD streams allow viewers to control playback, pause, rewind, and fast forward.

- live: Live streaming delivers real-time content as it happens. Viewers join the stream and watch the content as it’s being broadcast, with limited control over playback.

- live:dvr: Live DVR (Live Digital Video Recording) combines the features of both live and VOD. Viewers can join a live stream and simultaneously pause, rewind, and fast forward, offering more flexibility in watching live events.

- ll-live: A live streaming mode optimized for reduced latency, providing a near-real-time viewing experience with minimal delay between the live event and the viewer.

- ll-live:dvr: Similar to low-latency live, this mode enables viewers to experience live content with minimal delay while enjoying the benefits of DVR features (same as live:dvr).
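The naming scheme above is regular enough to classify programmatically. This TypeScript sketch uses hypothetical helpers (not player APIs); treating plain live streams as non-seekable is an assumption based on the "limited control over playback" described for live.

```typescript
// Mirrors the stream type values listed above.
type StreamType = "on-demand" | "live" | "live:dvr" | "ll-live" | "ll-live:dvr";

// Every type except on-demand is some form of live stream.
function isLive(type: StreamType): boolean {
  return type !== "on-demand";
}

// Low-latency variants are prefixed with "ll-".
function isLowLatency(type: StreamType): boolean {
  return type.startsWith("ll-");
}

// Assumption: only VOD and DVR streams let viewers rewind/seek backward;
// plain live streams are treated as non-seekable.
function canSeekBackward(type: StreamType): boolean {
  return type === "on-demand" || type.endsWith(":dvr");
}
```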

If the value is not set, the player will infer it, which can be less accurate (e.g., at identifying DVR support). When possible, prefer specifying it like so:

Duration

By default, the duration is inferred from the provider and media. It’s always best to provide the duration when known to avoid any inaccuracies such as rounding errors, and to ensure UI is set to the correct state without waiting on metadata to load. You can specify the exact duration like so:

Clipping

Clipping allows shortening the media by specifying the time at which playback should start and end.

- You can set a clip start time, a clip end time, or both; neither is required.

- The media duration and chapter durations will be updated to match the clipped length.

- Any media resources such as text tracks and thumbnails should use the full duration.

- Seeking to a new time is based on the clipped duration. For example, if a 1-minute video is clipped to 30 seconds, seeking to 30s will be the end of the video.

- Media URI Fragments are set internally to efficiently load audio and video files between the clipped start and end times (e.g., /video.mp4#t=30,60).
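The clipping rules above can be sketched in TypeScript as follows. These helpers (Clip, clippedDuration, toMediaTime, withTemporalFragment) are hypothetical and only model the described behavior; they are not the player's internals. The fragment format follows the Media Fragments URI temporal syntax (#t=start,end in seconds).

```typescript
// Hypothetical clip settings; both bounds are optional.
interface Clip {
  start?: number; // clip start in seconds (defaults to 0)
  end?: number; // clip end in seconds (defaults to the full duration)
}

// The duration reported to the UI is the clipped length.
function clippedDuration(fullDuration: number, clip: Clip): number {
  const start = clip.start ?? 0;
  const end = clip.end ?? fullDuration;
  return Math.max(0, end - start);
}

// Seek positions are relative to the clip, so convert them to a position in
// the underlying media file, clamped to the clipped range.
function toMediaTime(clipTime: number, fullDuration: number, clip: Clip): number {
  const start = clip.start ?? 0;
  const length = clippedDuration(fullDuration, clip);
  return start + Math.min(Math.max(clipTime, 0), length);
}

// Append a temporal media fragment so only the clipped range is requested.
function withTemporalFragment(src: string, clip: Clip): string {
  const start = clip.start ?? 0;
  return clip.end === undefined ? `${src}#t=${start}` : `${src}#t=${start},${clip.end}`;
}
```

For example, with a 60-second file clipped to 15s..45s, seeking to 30s in the player corresponds to 45s in the file, which is the end of the clip.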

Media Session

The Media Session API is automatically set using the provided title, artist, and artwork (poster is used as fallback) player properties.
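A minimal sketch of the poster fallback described above, assuming a hypothetical PlayerInfo shape. The SessionMetadata type mirrors the fields of the Media Session API's MediaMetadataInit dictionary (title, artist, artwork) but is declared locally so the sketch compiles without DOM typings.

```typescript
// Mirrors the shape of MediaImage / MediaMetadataInit from the Media Session API.
interface ArtworkImage {
  src: string;
  sizes?: string; // e.g., "512x512"
  type?: string; // e.g., "image/png"
}

interface SessionMetadata {
  title: string;
  artist: string;
  artwork: ArtworkImage[];
}

// Hypothetical player properties relevant to the media session.
interface PlayerInfo {
  title?: string;
  artist?: string;
  artwork?: ArtworkImage[];
  poster?: string;
}

// Build session metadata, using the poster as artwork when none is provided.
function toSessionMetadata(info: PlayerInfo): SessionMetadata {
  let artwork: ArtworkImage[] = [];
  if (info.artwork && info.artwork.length > 0) {
    artwork = info.artwork;
  } else if (info.poster) {
    artwork = [{ src: info.poster }];
  }
  return { title: info.title ?? "", artist: info.artist ?? "", artwork };
}

// In a browser, the result could be applied with the standard API:
//   navigator.mediaSession.metadata = new MediaMetadata(toSessionMetadata(info));
```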

Storage

Storage enables saving player and media settings so that the user can resume where they left off. This includes saving and initializing on load settings such as language, volume, muted, captions visibility, and playback time.

Local Storage

Local Storage enables saving data locally on the user’s browser. This is a simple and fast option for remembering player settings, but it won’t persist across domains, devices, or browsers.

Provide a storage key prefix for turning local storage on like so:
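The player handles persistence itself once a prefix is provided. To illustrate what a key prefix buys you, here is a hypothetical TypeScript sketch in which two players sharing one storage keep separate settings. PrefixedSettings, MemoryStore, and the "prefix:name" key format are assumptions for illustration, not the player's internal storage format.

```typescript
// Minimal Storage-like interface; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Namespaces every setting under a prefix so players don't overwrite each other.
class PrefixedSettings {
  private readonly store: KeyValueStore;
  private readonly prefix: string;

  constructor(store: KeyValueStore, prefix: string) {
    this.store = store;
    this.prefix = prefix;
  }

  // e.g., prefix "my-player" + name "volume" -> "my-player:volume"
  private key(name: string): string {
    return `${this.prefix}:${name}`;
  }

  get<T>(name: string): T | null {
    const raw = this.store.getItem(this.key(name));
    return raw === null ? null : (JSON.parse(raw) as T);
  }

  set<T>(name: string, value: T): void {
    this.store.setItem(this.key(name), JSON.stringify(value));
  }
}

// In-memory stand-in for window.localStorage so the sketch runs outside a browser.
class MemoryStore implements KeyValueStore {
  private readonly data = new Map<string, string>();

  getItem(key: string): string | null {
    return this.data.get(key) ?? null;
  }

  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
}
```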

Extending Local Storage

Optionally, you can extend and customize local storage behaviour like so:

Remote Storage

Remote Storage enables asynchronously saving and loading data from anywhere. This is great as settings will persist across user sessions even if the domain, device, or browser changes. Generally, you will save player/media settings to a remote database based on the currently authenticated user.

Implement the MediaStorage interface and provide it to the player like so:
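The MediaStorage interface itself is not reproduced here. As a hedged illustration of the asynchronous save/load flow, the sketch below uses a simplified, hypothetical RemoteSettings class and SettingsBackend interface (not Vidstack's MediaStorage signatures), backed by an in-memory map that stands in for a per-user database or HTTP API.

```typescript
// Hypothetical subset of the settings mentioned above.
interface PlayerSettings {
  volume: number;
  muted: boolean;
  lang: string | null;
  time: number;
}

// Hypothetical async backend, e.g., a REST endpoint keyed by user ID.
interface SettingsBackend {
  load(userId: string): Promise<Partial<PlayerSettings> | undefined>;
  save(userId: string, settings: Partial<PlayerSettings>): Promise<void>;
}

const DEFAULT_SETTINGS: PlayerSettings = { volume: 1, muted: false, lang: null, time: 0 };

class RemoteSettings {
  private readonly backend: SettingsBackend;
  private readonly userId: string;
  private cache: PlayerSettings = { ...DEFAULT_SETTINGS };

  constructor(backend: SettingsBackend, userId: string) {
    this.backend = backend;
    this.userId = userId;
  }

  // Fetch the user's saved settings once, falling back to defaults.
  async init(): Promise<PlayerSettings> {
    const saved = await this.backend.load(this.userId);
    this.cache = { ...DEFAULT_SETTINGS, ...saved };
    return this.cache;
  }

  // Update the local copy immediately, then persist asynchronously.
  async update(patch: Partial<PlayerSettings>): Promise<void> {
    this.cache = { ...this.cache, ...patch };
    await this.backend.save(this.userId, this.cache);
  }

  get current(): PlayerSettings {
    return this.cache;
  }
}

// In-memory backend standing in for a remote database.
class FakeBackend implements SettingsBackend {
  private readonly rows = new Map<string, Partial<PlayerSettings>>();

  async load(userId: string): Promise<Partial<PlayerSettings> | undefined> {
    return this.rows.get(userId);
  }

  async save(userId: string, settings: Partial<PlayerSettings>): Promise<void> {
    this.rows.set(userId, { ...settings });
  }
}
```

Keeping a local cache means the UI can react to a change immediately while the network write completes in the background.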

Sources

The player can accept one or more media sources which can be a string URL of the media resource to load, or any of the following objects: MediaStream, MediaSource, Blob, or File.

Single Source

Multiple Source Types

The list of supported media formats varies from one browser to the other. You should either provide your source in a single format that all relevant browsers support, or provide multiple sources in enough different formats that all the browsers you need to support are covered.

Source Objects

The player accepts both audio and video source objects. This includes MediaStream, MediaSource, Blob, and File.

Changing Source

The player supports changing the source dynamically. Simply update the src property when you want to load new media. You can also set it to an empty string "" to unload media.

Source Types

The player source selection process relies on file extensions, object types, and type hints to determine which provider to load and how to play a given source. The following is a table of supported media file extensions and types for each provider:

The following are valid as they have a file extension (e.g., video.mp4) or type hint (e.g., video/mp4):

- src="https://example.com/video.mp4"

- src="https://example.com/hls.m3u8"

- src="https://example.com/dash.mpd"

- src = { src: "https://example.com/video", type: "video/mp4" }

- src = { src: "https://example.com/hls", type: "application/x-mpegurl" }

- src = { src: "https://example.com/dash", type: "application/dash+xml" }

The following are invalid as they are missing a file extension and type hint:

- src="https://example.com/video"

- src="https://example.com/hls"

- src="https://example.com/dash"
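To illustrate the rule above (a source needs either a recognizable file extension or a type hint), here is a hypothetical TypeScript helper. The extension and MIME lists are a small assumed subset rather than the player's full table, and inferProvider is not a player API.

```typescript
type ProviderKind = "video" | "hls" | "dash";
type Source = string | { src: string; type?: string };

// Assumed subset of type hints per provider.
const BY_TYPE: Record<string, ProviderKind> = {
  "video/mp4": "video",
  "video/webm": "video",
  "application/x-mpegurl": "hls",
  "application/vnd.apple.mpegurl": "hls",
  "application/dash+xml": "dash",
};

// Assumed subset of file extensions per provider.
const BY_EXTENSION: Record<string, ProviderKind> = {
  mp4: "video",
  webm: "video",
  m3u8: "hls",
  mpd: "dash",
};

// Returns the provider a source maps to, or null if it can't be determined.
function inferProvider(source: Source): ProviderKind | null {
  const { src, type } = typeof source === "string" ? { src: source, type: undefined } : source;
  // An explicit type hint wins over the URL.
  if (type) return BY_TYPE[type.toLowerCase()] ?? null;
  // Ignore query strings and fragments when reading the extension.
  const path = src.split(/[?#]/)[0];
  const match = /\.([a-z0-9]+)$/i.exec(path);
  return match ? (BY_EXTENSION[match[1].toLowerCase()] ?? null) : null;
}
```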

Source Sizes

You can provide video qualities/resolutions using multiple video files with different sizes (e.g., 1080p, 720p, 480p) like so:

We strongly recommend using adaptive streaming protocols such as HLS over providing multiple static media files, see the Video Qualities section for more information.

Supported Codecs

Vidstack Player relies on the native browser runtime to handle media playback, hence it’s important you review what containers and codecs are supported by them. This also applies to libraries like hls.js and dash.js which we use for HLS/DASH playback in browsers that don’t support it natively.

While there are a vast number of media container formats, the ones listed below are the ones you are most likely to encounter. Some support only audio while others support both audio and video. The most commonly used containers for media on the web are probably MPEG-4 (MP4), Web Media File (WEBM), and MPEG Audio Layer III (MP3).

It’s important that both the media container and codecs are supported by the native runtime. Please review the following links for what’s supported and where:

Providers

Providers are auto-selected during the source selection process and dynamically loaded via a provider loader (e.g., VideoProviderLoader). The following providers are supported at this time:

See source types for how to ensure the correct media provider is loaded.

Provider Events

The following events will fire as providers change or set up:

Provider Types

The following utilities can be useful for narrowing the type of a media provider:
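The utilities themselves are not listed here. The TypeScript sketch below shows the general type-guard technique such narrowing helpers rely on, using a hypothetical discriminated union; the provider shapes and guard names are illustrative and are not the Vidstack types.

```typescript
// Hypothetical provider shapes discriminated by a `type` field.
interface VideoProviderLike { type: "video"; video: { src: string } }
interface HLSProviderLike { type: "hls"; video: { src: string }; levels: number[] }
interface YouTubeProviderLike { type: "youtube"; videoId: string }

type ProviderLike = VideoProviderLike | HLSProviderLike | YouTubeProviderLike;

// Type guard: inside an `if`, TypeScript narrows `p` to HLSProviderLike.
function isHLSProviderLike(p: ProviderLike | null): p is HLSProviderLike {
  return p?.type === "hls";
}

// In this model, HTML5-based providers both expose a video element.
function hasVideoElement(p: ProviderLike | null): p is VideoProviderLike | HLSProviderLike {
  return p?.type === "video" || p?.type === "hls";
}

function describeProvider(p: ProviderLike | null): string {
  if (isHLSProviderLike(p)) return `hls with ${p.levels.length} levels`;
  if (hasVideoElement(p)) return `video element: ${p.video.src}`;
  // Only YouTubeProviderLike | null remains here.
  return p ? `youtube ${p.videoId}` : "no provider";
}
```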

Audio Tracks

Audio tracks are loaded from your HLS playlist. You cannot manually add audio tracks to the player at this time. See the Audio Tracks API guide for how to interact with audio tracks programmatically.

Text Tracks

Text tracks allow you to provide text-based information or content associated with video or audio. These text tracks can be used to enhance the accessibility and user experience of media content in various ways. You can provide multiple text tracks dynamically like so:

See the Text Tracks API guide for how to interact with text tracks programmatically.

Text Track Default

When default is set on a text track, it will set the mode of that track to showing immediately. In other words, this track is immediately active. Only one default is allowed per track kind.
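If you build track lists dynamically, it can help to check the "one default per kind" rule before handing them to the player. This TypeScript sketch assumes a hypothetical TrackInit descriptor; its field names are illustrative, and kindsWithMultipleDefaults is not a player API.

```typescript
// Text track kinds listed in this guide.
type TrackKind = "subtitles" | "captions" | "chapters" | "descriptions" | "metadata";

// Hypothetical track descriptor; field names are illustrative assumptions.
interface TrackInit {
  src: string;
  kind: TrackKind;
  label: string;
  language?: string;
  default?: boolean;
}

// Returns the kinds that have more than one track marked as default,
// i.e., the kinds that break the "one default per kind" rule.
function kindsWithMultipleDefaults(tracks: TrackInit[]): TrackKind[] {
  const counts = new Map<TrackKind, number>();
  for (const track of tracks) {
    if (track.default) {
      counts.set(track.kind, (counts.get(track.kind) ?? 0) + 1);
    }
  }
  return Array.from(counts.entries())
    .filter(([, n]) => n > 1)
    .map(([kind]) => kind);
}
```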

Text Track Formats

The vidstack/media-captions library handles loading, parsing, and rendering captions inside of the player. The following caption formats are supported:

See the links provided for more information and any limitations. Do note, all caption formats are mapped to VTT which is extended to support custom styles. In addition, browsers or providers may also support loading additional text tracks. For example, Safari and the HLS provider will load captions embedded in HLS playlists.

You can specify the desired text track format like so:

Text Track Kinds

The following text track kinds are supported:

- subtitles: Provides a written version of the audio for non-native speakers.

- captions: Includes dialog and descriptions of important audio elements, like music or sound effects.

- chapters: Contains information (e.g., title and start times) about the different chapters or sections of the media file.

- descriptions: Provides information about the visual content to assist individuals who are blind or visually impaired.

- metadata: Additional descriptive data within a media file, such as descriptions, comments, or annotations related to the media content. It is not displayed as subtitles or captions but serves as background information that can be used for search engine optimization, accessibility enhancements, or supplementary details for the audience.

JSON Tracks

JSON content can be provided directly to text tracks or loaded from a remote location like so:

Example JSON text tracks:

Example JSON cues:

Example JSON regions and cues:
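The JSON examples themselves are not reproduced here. As a hedged sketch, assuming cues shaped like { startTime, endTime, text } (an assumption modeled on VTTCue fields; confirm the exact schema against the player's JSON track reference), this TypeScript validates JSON cue data before handing it to a track. parseJSONCues is a hypothetical helper.

```typescript
// Assumed cue shape for illustration.
interface JSONCue {
  startTime: number; // seconds
  endTime: number; // seconds
  text: string;
}

function parseJSONCues(json: string): JSONCue[] {
  const data: unknown = JSON.parse(json);
  // Accept either a bare array of cues or an object with a `cues` array.
  const list: unknown = Array.isArray(data)
    ? data
    : typeof data === "object" && data !== null
      ? (data as { cues?: unknown }).cues
      : undefined;
  if (!Array.isArray(list)) throw new Error("expected an array of cues");

  const cues = list.map((raw: unknown, i: number): JSONCue => {
    const c = (raw ?? {}) as Partial<JSONCue>;
    if (
      typeof c.startTime !== "number" ||
      typeof c.endTime !== "number" ||
      typeof c.text !== "string" ||
      c.endTime < c.startTime
    ) {
      throw new Error(`invalid cue at index ${i}`);
    }
    return { startTime: c.startTime, endTime: c.endTime, text: c.text };
  });

  // Keep cues ordered by start time.
  return cues.sort((a, b) => a.startTime - b.startTime);
}
```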

LibASS

We provide a direct integration for a WASM port of libass if you’d like to use advanced ASS features that are not supported by default.

1. npm i jassub

2. Copy the node_modules/jassub/dist directory to your public directory (e.g., public/jassub).

3. Add the LibASSTextRenderer to the player like so:

See the JASSUB options for how to further configure the LibASS renderer.

Thumbnails

Thumbnails are small, static images or frames extracted from the video or audio content. These images serve as a visual preview or representation of the media content, allowing users to quickly identify and navigate to specific points within the video or audio. Thumbnails are often displayed in the time slider or chapters menu, enabling users to visually browse and select the part of the content they want to play.

Usage

Thumbnails can be loaded using the Thumbnail component or useThumbnails hook. They’re also supported out of the box by the Default Layout and Plyr Layout.

Thumbnails are generally provided in the Web Video Text Tracks (WebVTT) format. The WebVTT file specifies the time ranges of when to display images, with the respective image URL and coordinates (only required if using a sprite). You can refer to our thumbnails example to get a better idea of how this file looks.

Sprite

Sprites are large storyboard images that contain multiple small tiled thumbnails. They’re preferred over loading multiple images because:

- They reduce total file size due to compression.

- They avoid loading delays for each thumbnail.

- They reduce the number of server requests.

The WebVTT file must append the coordinates of each thumbnail like so:
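Sprite coordinates are commonly written as a Media Fragments spatial suffix (#xywh=x,y,width,height) after the image URL in each cue. Assuming that convention, here is a minimal TypeScript parser for such files; parseThumbnailVTT is a hypothetical helper that ignores cue settings, NOTE blocks, and other WebVTT features, so treat it as a sketch rather than a full WebVTT parser.

```typescript
interface Thumbnail {
  start: number; // seconds
  end: number; // seconds
  url: string;
  coords?: { x: number; y: number; w: number; h: number };
}

// Parses "hh:mm:ss.ttt" or "mm:ss.ttt" into seconds.
function parseTimestamp(ts: string): number {
  return ts
    .trim()
    .split(":")
    .map(Number)
    .reduce((acc, part) => acc * 60 + part, 0);
}

function parseThumbnailVTT(vtt: string): Thumbnail[] {
  const thumbs: Thumbnail[] = [];
  const lines = vtt.split(/\r?\n/);
  for (let i = 0; i < lines.length; i++) {
    if (!lines[i].includes("-->")) continue;
    const [from, to] = lines[i].split("-->");
    // The cue payload (image URL) is on the line after the timing line.
    const payload = (lines[i + 1] ?? "").trim();
    if (!payload) continue;
    const [url, fragment] = payload.split("#");
    const m = /^xywh=(\d+),(\d+),(\d+),(\d+)$/.exec(fragment ?? "");
    thumbs.push({
      start: parseTimestamp(from),
      end: parseTimestamp(to.trim().split(/\s+/)[0]), // drop any cue settings
      url,
      coords: m ? { x: +m[1], y: +m[2], w: +m[3], h: +m[4] } : undefined,
    });
  }
  return thumbs;
}
```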

Multiple Images

Sprites should generally be preferred, but if you only have multiple individual thumbnail images, they can be specified like so:

Thumbnails can be loaded as a JSON file. Ensure the Content-Type header is set to application/json on the response. The returned JSON can be VTT cues, an array of images, or a storyboard.

Mux storyboards are supported out of the box:

Example JSON VTT:

Example JSON images:

Example JSON storyboard:

Object

Example object with multiple images:

Example storyboard object:

Provide objects directly like so:

Video Qualities

Adaptive streaming protocols like HLS and DASH not only enable streaming media in chunks, but can also adapt playback quality based on the device size, network conditions, and other information. Adaptive quality is important for speeding up initial delivery and for avoiding loading excessive amounts of data, which causes painful buffering delays.

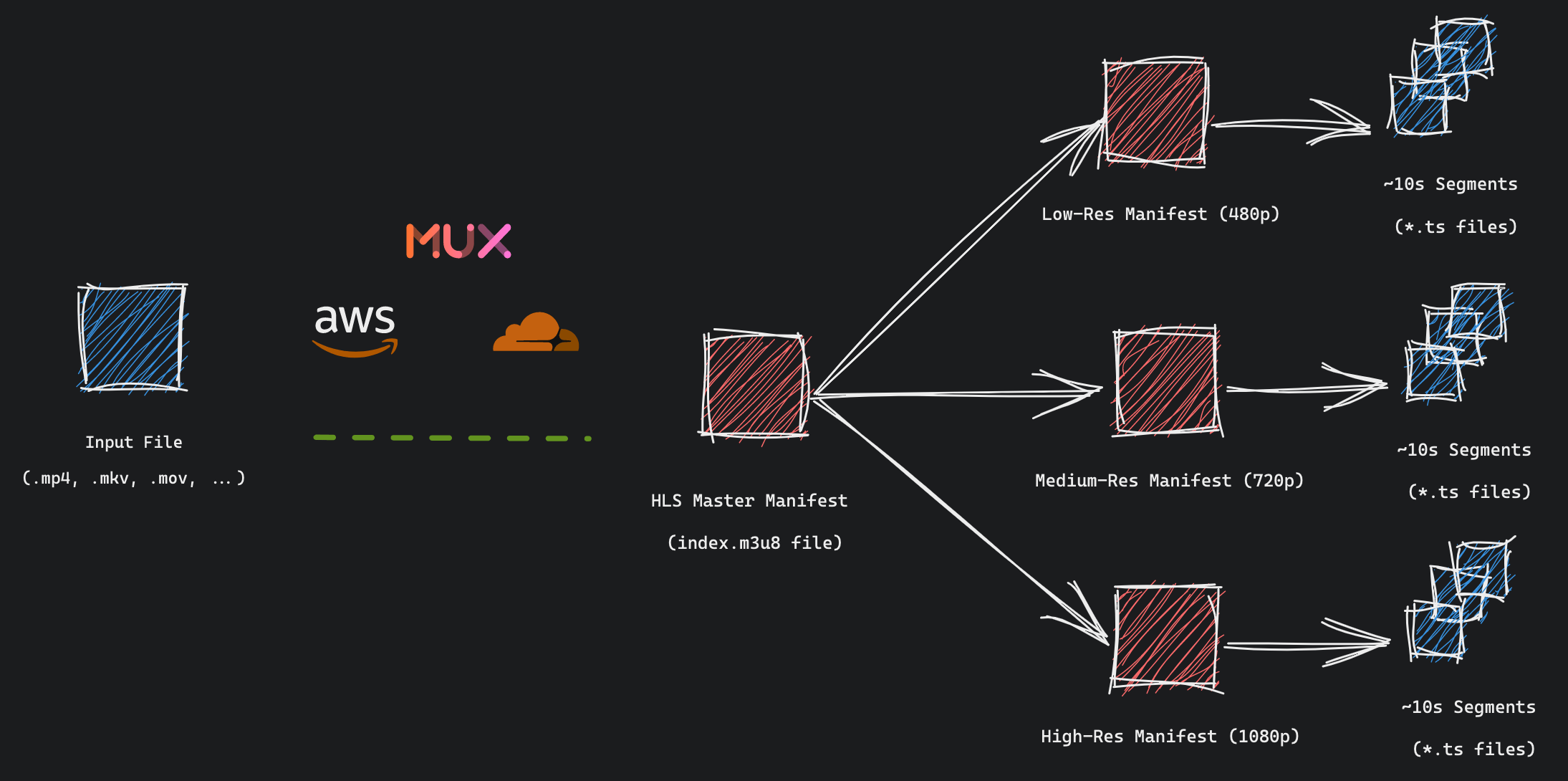

Video streaming platforms such as Cloudflare Stream and Mux will take an input video file (e.g., awesome-video.mp4) and create multiple renditions out of the box for you, with multiple resolutions (width/height) and bit rates:

By default, the best quality is automatically selected by the streaming engine such as hls.js or dash.js. You’ll usually see this as an “Auto” option in the player quality menu. It can also be manually set if the engine is not making optimal decisions, as they’re generally more conservative to avoid excessive bandwidth usage.
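To show why automatic selection tends to be conservative, here is a deliberately simplified TypeScript "Auto" picker. It is not the algorithm hls.js or dash.js use; pickRendition and the 0.8 safety factor are assumptions for illustration. It chooses the highest-bitrate rendition that fits within a fraction of the measured bandwidth, falling back to the lowest rendition.

```typescript
// A rendition from the playlist; bitrate in bits per second.
interface Rendition {
  height: number;
  bitrate: number;
}

// Pick the highest rendition whose bitrate fits within a safety margin of
// the measured bandwidth; always fall back to the lowest bitrate.
function pickRendition(renditions: Rendition[], bandwidthBps: number, safetyFactor = 0.8): Rendition {
  if (renditions.length === 0) throw new Error("no renditions");
  const sorted = [...renditions].sort((a, b) => a.bitrate - b.bitrate);
  const budget = bandwidthBps * safetyFactor;
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.bitrate <= budget) choice = r;
  }
  return choice;
}
```

A lower safety factor trades picture quality for fewer stalls, which is the same trade-off that sometimes makes manually selecting a quality worthwhile.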

Once you have your HLS or DASH playlist, either by creating it yourself using FFmpeg or by using a streaming provider, you can pass it to the player like so:

See the Video Qualities API guide for how to interact with renditions programmatically.